The Technologist

Issue 1. The newsletter that simplifies one tech concept for you weekly.

Welcome to The Technologist!

A quick introduction to the inaugural newsletter.

This newsletter was inspired by one of my favorite Tech Concierge customers, a retired executive who has spent more than two decades serving on a range of private and public corporate boards of directors. I see myself as her personal “Q” — and just like James Bond’s tech-quartermaster, I am always thinking about the tools and training I can provide to give her any kind of advantage.

Recently we did a quick deep-dive into talking with AI on her iPhone, and I swear I have never seen her have more fun.

So as the rest of my week went by, I watched dozens of long-form YouTube videos and read dozens of PDF reports, news articles, tweets and more related to AI. It’s part of my job to always be learning. Of the hundreds of things I found, I hand-picked about half a dozen, maybe the top 1%, that I thought she would love, and I started sending links.

Then, just a few days later, I received a text from her:

I also realize that there are several links that you have sent me that I haven’t had a chance to watch, but I will

On the surface this seems fine. But in just a few seconds, I realized I had made a mistake.

When you get to know me, you realize that I am a Tortoise. While some of my lawyer friends are the human incarnation of a Hare capable of processing volumes of information incredibly fast to make deadlines, I am the opposite. I need time to process, to think through things deeply. This is what you want in a technologist. What I lack in velocity I make up for with superhero-level EQ and human skills.

So when I saw her text I instantly realized, OH NO, THIS IS BAD.

In my excitement to share a few exciting AI-related articles, I accidentally broke one of my golden rules of service: Never upward delegate.

Even just the few links I had shared represented 2 to 3 hours of consumption time: an hour-long YouTube video, a 180-page report, a tweet attached to a thread of 25 tweets and 100 comments. All good stuff, but way more of a commitment than a cute picture of my dog. Basically, my job is to save her time, not cost her time.

So at that moment I saw an opportunity:

What if I summarize one concept each week, supported by links, quotes, and other media, make it easy to read, and make it brief so it’s easy to consume in less than 10 minutes?

I replied back to her text almost instantly:

Oh no! I have an idea. Please don’t try catching up on all of those links- Let me send you something that summarizes them in an executive way, so you get the main point of each in under 1-2 minutes, and if you feel like going deeper you can.

And that is how The Technologist newsletter that you’re reading was born.

This week, let’s talk about Edge AI and what the media may have missed in Apple’s announcements.

Key points we’ll cover:

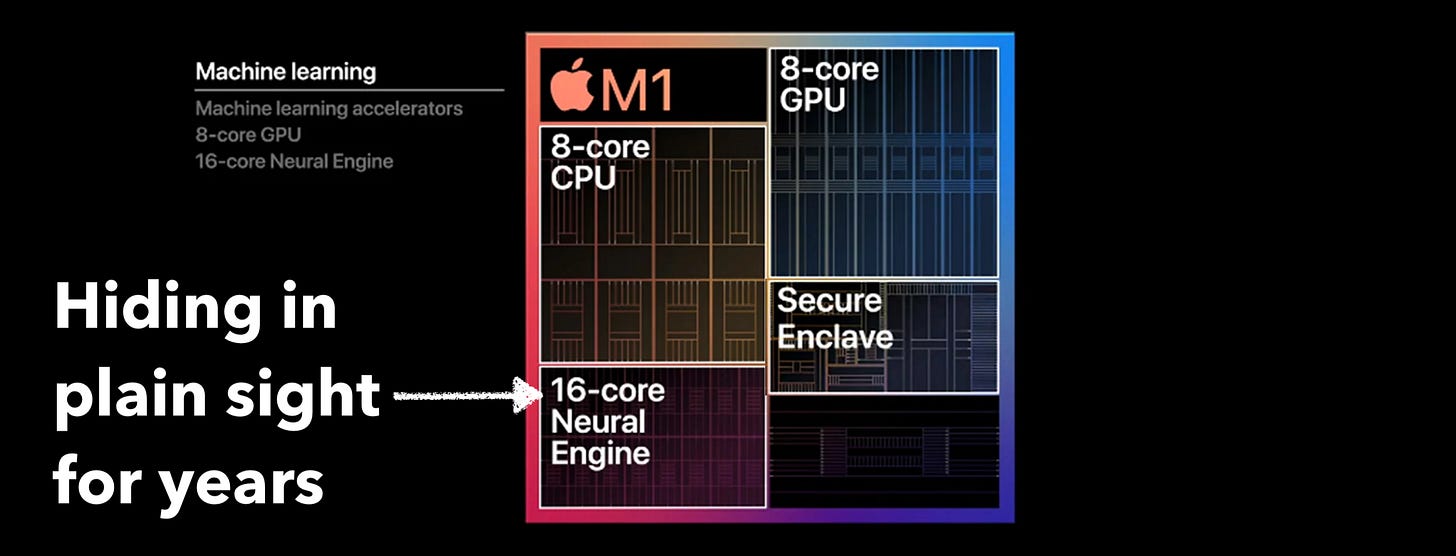

Apple’s Edge AI strategy unveiled: It’s been the same since at least 2017.

“Apple Intelligence” chips (you probably own a bunch and just don’t know it yet)

The difference between Cloud AI and Edge AI

Why Edge AI is better for privacy

Microsoft’s Recall is an example of Edge gone wrong

Two quick comments and probably the most important reading you can do this month

Where Apple is going with AI.

I can’t believe it’s only been one week since Apple’s Worldwide Developers Conference and Apple is already out of the news. The media is rabid for anything AI, and I think because of that they’re missing some of the most important, subtle and nuanced things that are happening, things so big that investors will be wringing their hands a year from now wondering how they could have missed them.

At its developer conference last week, Apple finally shared its vision for AI, which the Apple leadership team repeatedly referred to as “Apple Intelligence.”

This moniker actually gives a massive clue to Apple’s long-term strategy: Edge AI.

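Edge computing is the opposite of Cloud computing. “Edge” devices, such as our iPhones, Apple Watches, Macs and iPads, are Internet-connected, yet they live on the outer edges of the Internet. Edge AI runs on those devices themselves; Cloud AI runs in giant data centers somewhere else.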

What’s interesting is that Apple has been planning this for a long time, at least since 2017, when it quietly introduced the “Neural Engine” to the tech specs of its chips, starting with the iPhone.

Since 2017, Apple has been designing and selling incredible silicon of its own with faster and faster Neural Engines: dedicated hardware built to run the math behind neural networks, the technology at the heart of modern AI. Basically, YEARS BEFORE we ever heard of ChatGPT, Apple has been providing every iPhone, iPad and Mac user with AI chips that we haven’t been using to anything near their full potential. Yet.

And believe me, this is a Very Big Deal.

Apple’s very public little secret that you already own.

There are two points I want you to think about related to Apple’s strategy.

First, Apple believes the majority of AI processing should happen on-device, meaning on the device you own. The Internet just supplements that AI with new sources of information, updates, etc. Apple has been refining this privacy model for more than a decade, since Siri launched in 2011.

The second thing to think about is scale. Apple announced that it will allow ChatGPT to act as an agent on iPhones, which means it is potentially bringing ChatGPT to a network of more than 2 BILLION active Apple devices. Compare that to OpenAI’s roughly 100 million weekly users. The difference in scale is massive.

In fact, if you own Nvidia or Broadcom stock, you may want to pay close attention to one small detail Apple unveiled last week: Apple has built its own data centers, which it calls Private Cloud Compute, designed to “cloak” iPhone users’ requests when an AI task is too big to run on the device itself, so their data stays private even in the cloud.

They built these data centers with Apple Silicon.

Think about that. Wall Street is in a frenzy over Nvidia, and to the most cynical of us, this looks like a repeat of Cisco’s overvaluation in the late 1990s, when the Internet’s plumbing was being laid.

And while Nvidia keeps charging more and more to supply compute for 100 million ChatGPT users, Apple is showing how 2 BILLION users could be served with a compute investment that got little fanfare, especially given that Apple designed its chips from scratch and has made a LOT of them.

Apple has been, for seven years already, quietly and publicly building a different AI network: our devices. If you have time, I recommend listening to Emad Mostaque, the founder and former CEO of Stability AI, talk about the important freedom and privacy aspects of AI with edge computing.

Nvidia’s AI chips go into telephone-booth-sized racks that need buildings with custom coolant plumbing and electrical service, and each rack costs about $3 million USD. The buildings they go in cost half a billion USD just to start. Massive centralized Cloud computing from Nvidia and others is still important, but less so to regular day-to-day life. The massive supercomputers approaching trillion-dollar investments will be the technology that helps us create AGI (Artificial General Intelligence) and later superintelligence, both of which will be life-changing tools at a planetary level, solving problems humans have been unable to solve within the limits of our brains.

DISCLAIMER: I don’t own any stock in any of the companies named in this newsletter. I am not a financial advisor and I don’t play one on the Internet. But if I were, I would be looking at the fact that Apple was able to build out infrastructure for 2 billion people while Nvidia and OpenAI are still straining to serve 100 million, and I would be a little worried about Nvidia. Just something to think about.

Edge is the type of AI that ultimately makes sense for most of us.

While the news media’s attention has switched back to Nvidia’s monstrous valuation, I think Apple’s long-term strategy for AI makes more sense than anything we’ve seen to date.

None of us ever saw C-3PO or R2-D2 freeze up in Star Wars because of a Wi-Fi problem.

And in a world where privacy matters, a private conversation with your AI should mean that its contents don’t travel across and through millions of servers in the cloud.

Why can’t your AI have both capabilities? It could reach out to the Internet to pick up new skills for the conversation you need to have with it (think “jacking in” to the Matrix to “learn kung fu”), while the conversation you’re having with your AI about something incredibly private stays put on your device, where your AI lives.
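For the technically curious, here is a toy Python sketch of that two-part idea: private requests are handled on your device, and only general requests are allowed to reach out to the cloud. This is an illustration under simple assumptions, not how Apple or anyone else actually builds it; the topic labels, the `Request` type and the `run_on_device` / `run_in_cloud` functions are hypothetical stand-ins.

```python
from dataclasses import dataclass

# Hypothetical topic labels, for illustration only. A real assistant would
# need a far more careful way to decide what counts as private.
PRIVATE_TOPICS = {"health", "finances", "family", "legal"}


@dataclass
class Request:
    text: str
    topic: str  # assumed to be tagged already, e.g. by a small on-device model


def run_on_device(req: Request) -> str:
    """Stand-in for a model running on the phone's own AI chip."""
    return f"[on-device] handled: {req.text}"


def run_in_cloud(req: Request) -> str:
    """Stand-in for a call to a large cloud model for extra knowledge or skills."""
    return f"[cloud] handled: {req.text}"


def route(req: Request) -> str:
    """Keep private conversations on the device; only non-private
    requests are allowed to reach out to the Internet."""
    if req.topic in PRIVATE_TOPICS:
        return run_on_device(req)
    return run_in_cloud(req)


if __name__ == "__main__":
    print(route(Request("Summarize my lab results", "health")))
    # [on-device] handled: Summarize my lab results
    print(route(Request("What is the capital of Peru?", "general")))
    # [cloud] handled: What is the capital of Peru?
```

The design point is simply that the decision about where a conversation runs is made on the device, before anything leaves it.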

Then there’s the exact opposite of privacy.

Edge AI doesn’t automatically mean good privacy.

As I round up this week’s most interesting AI news, I have to bring up what looks like the Gare Montparnasse train wreck of major AI initiatives.

Microsoft’s CEO recently announced that new Windows 11 PCs will have a built-in technology called “Recall,” in which Windows takes a screenshot of your screen every few seconds and essentially records everything you do. These screenshots, potentially millions of them, are saved to the PC’s drive, and the PC’s built-in AI analyzes all of them so it can better help you when you have questions about what you’ve been working on.
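How fast do those screenshots pile up? Here is a back-of-the-envelope sketch in Python. The capture interval (one snapshot every 5 seconds), the 8-hour workday and the 250 workdays per year are illustrative assumptions, not Microsoft’s published figures.

```python
# Back-of-the-envelope estimate of how many screenshots a Recall-style
# feature could accumulate. All inputs are illustrative assumptions,
# not Microsoft's published figures.

SECONDS_PER_SNAPSHOT = 5   # assumed capture interval
HOURS_PER_WORKDAY = 8      # assumed time at the computer each day
WORKDAYS_PER_YEAR = 250    # assumed working days per year


def snapshots_per_day(interval_s: int, hours: int) -> int:
    """Number of snapshots captured in one day of use."""
    return (hours * 60 * 60) // interval_s


def snapshots_per_year(interval_s: int, hours: int, days: int) -> int:
    """Number of snapshots captured over a year of workdays."""
    return snapshots_per_day(interval_s, hours) * days


if __name__ == "__main__":
    daily = snapshots_per_day(SECONDS_PER_SNAPSHOT, HOURS_PER_WORKDAY)
    yearly = snapshots_per_year(SECONDS_PER_SNAPSHOT, HOURS_PER_WORKDAY, WORKDAYS_PER_YEAR)
    print(f"{daily:,} snapshots per day")    # 5,760 snapshots per day
    print(f"{yearly:,} snapshots per year")  # 1,440,000 snapshots per year
```

Even with these fairly conservative assumptions, a single work computer would accumulate well over a million screenshots a year.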

If Apple’s move to Edge for privacy makes sense, one has to wonder who Microsoft had in mind for Recall. The first use case that came to my mind was call centers: maybe Recall is a training tool for call centers that can’t afford AI agents in the first place, filled with the cheapest (read: lowest-skilled) labor?

But Satya Nadella came out publicly and very proudly introduced Recall as a feature for everyone.

In the 30 years that I have been a Tech Concierge to executives, the conversation about switching to Mac has almost always come up when the pain of using a PC becomes so great that my offer to seamlessly switch them to Mac becomes irresistible. But with Recall on the horizon for every Windows PC, I expect a lot of executives and other discerning professionals will want to switch to Mac.

Initial market feedback to Microsoft.

Feedback from across the industry, from large corporate customers to the world’s most successful tech YouTubers, has so far been one of horror.

Microsoft has just announced that the feature will ship disabled on all new PCs, but many YouTubers say Microsoft is not to be trusted, pointing to countless examples of the company forcibly upgrading customers to features they don’t want and making those features extremely hard, if not impossible, to roll back. OneDrive is a good example: once enabled, it affects all of your data and can prevent popular backup software from backing up your files.

What I am eagerly waiting for most this week.

There’s so much news about the latest versions of different AIs that it’s become more noise than news. It’s awesome to see the progress, but what we want most are game-changing results in our lives.

For now, what I want to see most is the new voice capability in ChatGPT’s GPT-4o model that supports live visual input. What this means is that really soon, possibly within days, you’ll be able to use a support arm with an iPhone clip to hold your phone above or pointed at something you’re working on, and you and the AI will be able to talk about what you both see in real time, together.

I think this is going to be a game-changer. My older son is preparing for junior high school midterms this week, and I have created a ChatGPT coach to work with him. It doesn’t do his homework; it coaches him on the concepts and guides him one step at a time until he can not only answer the questions but also show that he really understands the material he’s being tested on. Right now I upload pictures of textbook pages and other materials to ChatGPT. Live vision will make the teaching experience even better.

Finally, some important reading on AI timelines

It turns out we are much closer to the far-away future than we think.

Leopold Aschenbrenner just released a report this month titled Situational Awareness. One sentence on the opening page summarizes what you’re about to discover in this surprisingly easy to read document:

Everyone is now talking about AI, but few have the faintest glimmer of what is about to hit them.

The full report, including a PDF, is available at https://situational-awareness.ai/

Aschenbrenner is a former OpenAI insider and was part of its AI safety team. The report covers a lot of AI basics; if you’re not already familiar with how AI works, it is easy enough to read to give you some history while guiding you toward what we should be concerned about: the next global race for something potentially much more dangerous than the nuclear bomb, and how America and China will both likely react.

I highly recommend printing out and reading Aschenbrenner’s report (or AirDropping it to your iPad). What surprised me the most is that we’re probably only several months to one or two years away from the entire world changing in ways we can’t even imagine right now.

How was the first newsletter?

If you made it here, you’re at the end of the newsletter. Was it worth it? Did you learn something valuable to you?

As with anything, consistency itself is the basis of continuous improvement. I will work on becoming more concise, writing better, and sharing the ideas that I think really matter.

My plan for each week is to pick one topic or subject that is either wildly relevant to navigating our near-term future or immediately beneficial given the current local, national or global situation: the kind of matter a customer would hire me to address and/or solve for $50,000 or more. This way there’s extreme business value in this newsletter, not just more opinions, of which there is no shortage on social media.

Please forward this to everyone in your network who you think will benefit from it. You can sign up for the newsletter (free) at www.techconcierge.pro